Having a user tap to an audio signal will give you the sum of audio latency + input latency. Unfortunately, we can never measure a single type of latency by itself using a tap test. The standard way to measure and adjust for latency is through some sort of tap test (tap to the beat, or tap to the visual indicator), or by adjusting a video/audio offset. (One notable exception to this generalization would be when playing on a video projector or something like that.) And these systems do not require the same sort of mixing and buffering systems that audio does.

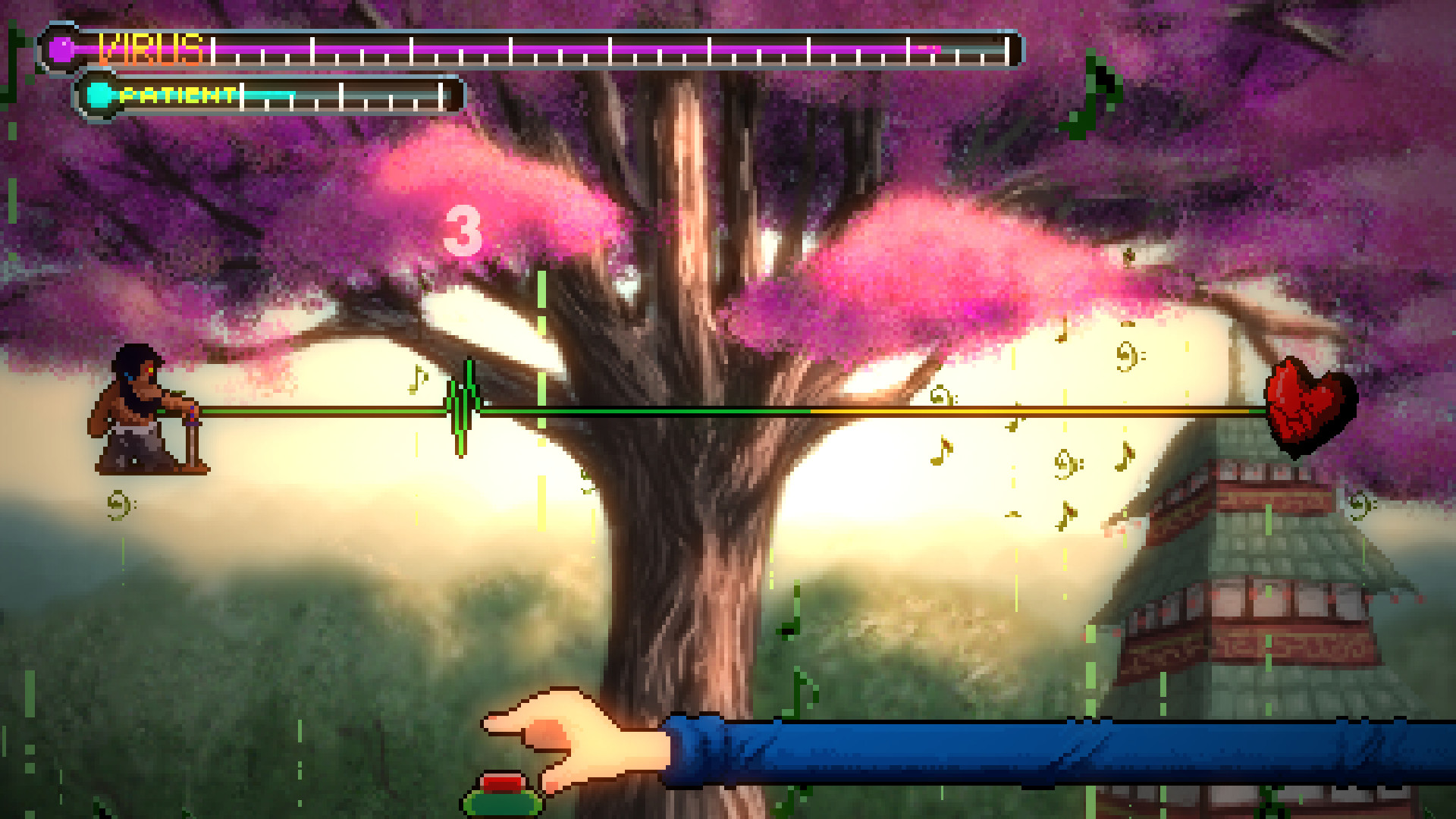

Rhythm doctor samurai android#

(This is especially true on Android devices which are notorious for having high amounts of audio latency) Input and video latency are already optimized for most other games: if pressing a button does not result in an immediate visual feedback, games feel very unresponsive. Note that usually, audio latency is the largest of the three latencies. For example, bypassing Unity's audio mixing and input processing systems will result in much lower latencies.but of course you lose out on those features (unless you re-implement them yourself). Trying to minimize these latencies usually involves adjusting various engine settings, and past that, going low-level and bypassing engine functionality entirely to interface directly with the low-level platform APIs. This is caused by input debouncing / processing delays, frame-based input handling, wireless controller transmission times, other stuff in the engine, etc. Input latency is the delay between a player performing an input and when that input is actually able to be handled by the game. This is caused by double-buffering/rendering queue systems, monitor refresh/update characteristics, etc. Visual latency is the delay between rendering an image and when that image is actually able to be seen.

Rhythm doctor samurai Bluetooth#

This is caused by various audio buffering systems, mixing delays, hardware/engine limitations, bluetooth headphone transmission time, the time it takes for sound to travel through the air, etc. Thus, we need to build some sort of latency calibration system for the player to be able to easily adjust this for themselves.Īctually, there are three separate types of latency that are relevant to us for synchronizing music with gameplay: audio latency, visual latency, and input latency.Īudio latency is the delay between playing a sound and when the sound is actually able to be heard. Unfortunately, the exact latency amount is different from device to device, so there is no universal measurement that works (plus, different players may perceive latency differently due to psychoacoustics, etc). Paige and Nicole are also my favorites but ummmm yeah, I'm really hoping the devs can give Ian his own little level someday, hopefully something along the lines of "One Shift More", especially with him being the creator of the whole rhythm defibrillation, I can see a lot of story potential in him.As mentioned briefly in a previous devlog, audio output always has some amount of latency/delay on every device and it's important that we be able to measure this so that we can "queue up" sound/music in advance to account for this delay. I also love that in most of the tutorials that he is featured in (assuming you are doing it correctly), he reassures the player by giving positive feedback such as "Nice!","Good!", "There you go!", and "G-great!" (at least he tends to give more than Paige does in my opinion). I love his personality: he seems to care deeply to those around him but can also a bit "blunt"/emotionally aloof (as seen in the beginning cut-scene of the game, 1-2N, 1-XN, and one of my favorites 4-1N [where Ian will comment "I think you missed it." or "Whoops, not like that." depending how you mess up the tutorial.

Tbh, I have a very soft spot in my heart for Ian (and i'm very surprised to see no one else talk about him).
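To make the "sum of audio latency + input latency" point concrete, here is a minimal Python sketch of what a tap-to-the-beat test actually measures. It assumes beat times and registered tap times are both in seconds on the same clock; the function names `estimate_tap_offset` and `judged_time` are hypothetical, and pairing each tap with its nearest beat plus taking the median are choices made for this sketch, not necessarily what any particular game does.

```python
from statistics import median


def estimate_tap_offset(beat_times, tap_times):
    """Estimate the combined latency measured by a tap-to-the-beat test.

    beat_times: times (seconds) at which each beat was scheduled to play.
    tap_times:  times (seconds) at which the game registered each tap,
                on the same clock as beat_times.

    The player reacts to what they hear, so each registered tap lands
    roughly (audio latency + input latency) after its beat. This test
    cannot separate the two terms.
    """
    if not beat_times or not tap_times:
        raise ValueError("need at least one beat and one tap")
    offsets = []
    for tap in tap_times:
        # Pair each tap with the nearest scheduled beat (assumes the
        # combined latency is under half a beat interval).
        nearest_beat = min(beat_times, key=lambda beat: abs(tap - beat))
        offsets.append(tap - nearest_beat)
    # The median is less sensitive than the mean to one stray early/late tap.
    return median(offsets)


def judged_time(registered_tap_time, calibrated_offset):
    """Shift a registered input back onto the song timeline before judging it."""
    return registered_tap_time - calibrated_offset


if __name__ == "__main__":
    beats = [1.0, 1.5, 2.0, 2.5, 3.0]      # a 120 BPM click track
    taps = [1.12, 1.61, 2.13, 2.59, 3.11]  # when the game registered each tap
    offset = estimate_tap_offset(beats, taps)
    print(f"audio + input latency ~ {offset * 1000:.0f} ms")
    print(f"a tap registered at 4.11 s is judged at {judged_time(4.11, offset):.2f} s")
```

Because the measured value already bundles audio and input latency together, it is exactly the amount to subtract from a registered tap when judging it against the music. The nearest-beat pairing breaks down once the combined latency approaches half a beat interval (250 ms at 120 BPM), so a slower calibration track would be needed in that case.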
